Assistant XiaoRui Deployment Manual

Prerequisites

- The customer must provide an API endpoint for calling the LLM. The LLM may be self-hosted in the customer's environment or provided by a cloud vendor (e.g., Volcano Engine, Baidu Cloud Platform, Alibaba Cloud Platform), as long as the customer can supply the necessary API details, such as the endpoint URL and key. The API must comply with the OpenAI specification, which most common LLMs, domestic and international, support. The example below uses Volcano Engine's DeepSeek-R1 API and shows the endpoint URL and key.

model.platform=volcengine

model.name=deepseek-r1-250528

model.api.key=abc123(use your own key)

model.base.url=https://ark.cn-beijing.volces.com/api/v3

- The customer must also provide API endpoints for an Embedding model and a ReRanker model. The Embedding model converts text into embedding vectors; the ReRanker model reorders search results during knowledge base retrieval to improve accuracy. As with the LLM, these two models may be self-hosted or provided by a cloud vendor (e.g., Volcano Engine, Baidu Cloud Platform, Alibaba Cloud Platform). Internally, we use Qwen3-Embedding-4B for embeddings and bge-reranker-v2-m3 for reranking. Customers may choose other Embedding and ReRanker models, but doing so may affect the final accuracy.

- Two services need to be deployed: chat-service and milvus-service. Both can be deployed in Docker or Kubernetes environments. Together they require two servers, each with 8 vCPUs and 16 GB RAM. milvus-service is deployed as a single node. SSD storage is recommended, because HDD storage may degrade query performance.
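Before deployment, it can help to confirm that the endpoints from the prerequisites actually answer OpenAI-style requests. The following is a minimal sketch, assuming curl is available and that the endpoints expose the standard OpenAI paths chat/completions and embeddings under the base URL. The function names and the environment variables in the commented examples are illustrative and not part of the product. The ReRanker endpoint is not covered, because its request format is not specified here.

```shell
#!/bin/sh
# Illustrative helpers (hypothetical names) for probing OpenAI-compatible endpoints.

# Append an API path to a base URL, dropping one trailing slash from the base.
api_url() {
    printf '%s/%s\n' "${1%/}" "$2"
}

# Minimal chat request body for the given model name.
chat_body() {
    printf '{"model":"%s","messages":[{"role":"user","content":"ping"}]}\n' "$1"
}

# Minimal embeddings request body for the given model name.
embedding_body() {
    printf '{"model":"%s","input":"ping"}\n' "$1"
}

# POST a JSON body. Arguments: URL, API key, JSON body.
# --fail makes curl return non-zero on HTTP errors such as 401 or 404.
post_json() {
    curl -sS --fail --max-time 120 \
        -H "Authorization: Bearer $2" \
        -H "Content-Type: application/json" \
        -d "$3" "$1"
}

# Example calls (commented out; fill in your own key, URL, and model names):
# post_json "$(api_url https://ark.cn-beijing.volces.com/api/v3 chat/completions)" \
#     "$MODEL_API_KEY" "$(chat_body deepseek-r1-250528)"
# post_json "$(api_url http://ip:port/v1 embeddings)" \
#     "$EMBEDDING_KEY" "$(embedding_body br-embedding)"
```

A JSON response from each call suggests the URL, key, and model name are usable as configuration values; a non-zero exit code from curl points to a wrong URL, key, or model name.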

Deployment Steps

- chat-service and milvus-service follow the same deployment process as other company components and can be deployed directly with Ansible.

- Ansible Deployment Steps:

  - Prepare the Ansible environment and obtain the Ansible package: Contact the Architecture Department, or download the Ansible package containing the chat-service and milvus-service components from the resource download platform (e.g., one_chat_service_X.X.X.X_increment.tar.gz).

  - Upload and extract the package, e.g.:

    tar -xvf one_chat_service_3.3.1.0_increment.tar.gz -C /data/ansible

  - Modify deployment parameters: Edit hosts.ini to specify the machine IPs for deploying chat-service and milvus-service.

  - Modify the all.yml file: Set the chat_service version number in /data/ansible/gaea/group_vars/all.yml (it must match the version packaged by the DevOps platform). Sometimes this version is pre-determined and requires no modification.

  - Execute the deployment command: Navigate to the Ansible bin directory and run the installation command:

    sh br.sh --install -t chat_service -S -vvv

  - Verify service status after deployment:

    docker ps -a | grep chat_service    # Confirm the container is running normally.

- The specific steps may vary depending on the chat-service version. It is necessary to confirm the version and detailed steps with the AI team before deployment.

- Since LLM root cause analysis relies on data such as call chains, logs, and alerts, it has dependencies on components like APM, RUM, Log, and Alert. Different chat-service versions may depend on different versions of these other components. Dependencies on other components must be confirmed with the AI team.

- It is recommended to deploy milvus-service first, followed by chat-service.

Post-Deployment Service Health Check:

-

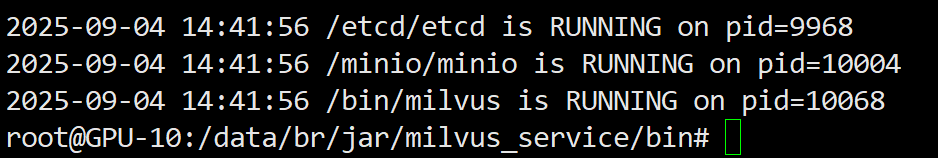

After deploying milvus-service, access the container using

docker exec -it br-milvus-service bash. Inside the container, run the commandbash milvus_service.sh 6. If all displayed processes show a status of RUNNING, the service is functioning normally, as shown in the example below:

-

After deploying chat-service, access the container using

docker exec -it br-chat-service bash. Inside the container, run the commandbash chat_service.sh 6. If all displayed processes show a status of RUNNING, the service is functioning normally. (The output is similar to the example above).

-
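The health check described above can also be scripted. This is only a sketch under stated assumptions: the exact output format of the status scripts is not documented here, so the check assumes every non-empty output line describes one process and contains the word RUNNING when healthy. It also assumes the status script can be found from the container's default working directory when invoked non-interactively. The function names are hypothetical.

```shell
#!/bin/sh
# Illustrative helpers (hypothetical names) for the post-deployment health check.

# Read status output on stdin. Succeed only if there is at least one
# non-empty line and every non-empty line contains RUNNING.
all_running() {
    awk 'NF { total++; if ($0 !~ /RUNNING/) bad++ }
         END { if (total > 0 && bad == 0) exit 0; exit 1 }'
}

# Run a status script inside a container and evaluate its output.
# Arguments: container name, status script name.
# Assumption: the script is reachable from the container's default directory.
check_service() {
    docker exec "$1" bash "$2" 6 | all_running
}

# Example (commented out), milvus-service first, then chat-service:
# check_service br-milvus-service milvus_service.sh && echo "milvus-service OK"
# check_service br-chat-service chat_service.sh && echo "chat-service OK"
```

If the real output contains header lines or other text without RUNNING, the check fails even for a healthy service, so the filter in all_running would need to be adapted to the actual format.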

Configure LLM Parameters Post-Deployment

- After deploying the chat-service and milvus-service, the first step is to configure the API endpoints for the LLM, Embedding model, and ReRanker model. Configuration method: modify the content of the chat-service's private configuration in NACOS (default: CHAT_SERVICE, which may be subject to change).

- Configure the primary LLM API parameters.

model.platform=volcengine

model.name=deepseek-r1-250528

model.api.key=abc123(use your own key)

model.base.url=https://ark.cn-beijing.volces.com/api/v3

- Configure the secondary LLM API parameters. (The secondary LLM is typically a faster alternative model. If unavailable, it can be set to the same as the primary LLM above.)

# Secondary LLM Configuration

sub.model.platform=volcengine

sub.model.name=doubao-seed-1-6-flash

sub.model.api.key=(use your own key)

sub.model.base.url=https://ark.cn-beijing.volces.com/api/v3

# Third LLM Configuration (If unavailable, it can be configured to be the same as the primary LLM above.)

third.model.platform=volcengine

third.model.name=doubao-seed-1-6-flash

third.model.api.key=(use your own key)

third.model.base.url=https://ark.cn-beijing.volces.com/api/v3

- Configure the API endpoints for the Embedding model and ReRanker model.

# Embedding Model Configuration

embedding.model.name=br-embedding(use your own name)

embedding.model.key=(use your own key)

embedding.model.url=http://ip:port/v1(use your own url)

# Rerank Model Configuration

rerank.model.name=br-rerank(use your own name)

rerank.model.key=(use your own key)

rerank.model.url=http://ip:port(use your own url)

- After the configuration is complete, restart the service using the command:

  docker restart br-chat-service
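Because a missing or placeholder value only surfaces after the restart, a quick check of the configuration text beforehand may save a round trip. The sketch below assumes the NACOS configuration content has been saved to a local file in key=value form. The file name in the usage note and the helper names are hypothetical, and the list of keys is simply taken from the examples in this section.

```shell
#!/bin/sh
# Illustrative pre-publish check (hypothetical helper names).

# Keys taken from the configuration examples in this section.
REQUIRED_KEYS="
model.platform model.name model.api.key model.base.url
sub.model.platform sub.model.name sub.model.api.key sub.model.base.url
third.model.platform third.model.name third.model.api.key third.model.base.url
embedding.model.name embedding.model.key embedding.model.url
rerank.model.name rerank.model.key rerank.model.url
"

# Succeed if the file (arg 1) has a line "key=value" for the key (arg 2)
# with a non-empty value that no longer carries a "use your own" placeholder.
has_key() {
    awk -F= -v k="$2" '
        $1 == k && length($2) > 0 && $0 !~ /use your own/ { found = 1 }
        END { exit !found }' "$1"
}

# Print every required key that is absent, empty, or still a placeholder.
missing_keys() {
    for key in $REQUIRED_KEYS; do
        has_key "$1" "$key" || printf '%s\n' "$key"
    done
}
```

For example, running missing_keys chat_service.properties prints one key per line for anything still to be filled in, and prints nothing when every key has a value.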